Collecting the CWGC Data - Part 1

Introduction

This is the first post in a series that explores data collection using R to collect the Commonwealth War Graves Commission (CWGC) dataset.

With the darkness, ‘the melancholy work of the burial of the dead was begun. The special party told off for this work dug graves in High Wood itself, and all the dead who could be found were buried side by side there.’ ‘As one looked on the weary band of tired and muddy comrades who had come to fulfill this last duty to their friend, one felt in a way one seldom does at an ordinary funeral, that … [the dead] really were to be envied, since for them the long-drawn agony of war was at an end.’

Hanson, N. (2019), Unknown Soldiers: The Story of the Missing of the Great War, Lume Books

The CWGC database lists 1,741,972 records, which represents the total number of war dead that the CWGC commemorates. This number is slowly increasing as researchers uncover individuals whose role in the wars had previously been unknown. In addition to searching for an individual by name, the website offers a number of advanced search terms that may be used to refine the search. These terms include:

- War (WWI or WWII)

- Country served with (predominantly Commonwealth countries)

- Cemetery or memorial

- Country of commemoration (over 150 countries)

- Branch of service (Army, Air Force, Navy…)

- Date of death

- Age at death

- Unit (Regiment, Squadron, Ship…)

- Rank (Private, Corporal…)

- Honours & Awards (DFC, MM, VC, DSO…)

My initial plan was to write a script that would scrape the data using the webpage search terms, and to do this I needed to figure out which of the terms would get me all the data the most efficiently. By default, the results are paginated up to 500 pages, each with 20 records, for a maximum of 10,000 records. This meant that I needed a set of search terms that would generate sets of results that did not individually exceed 10,000 records. With this in mind, trying to split the results by War, Country Served With, Country of Commemoration and Branch of Service was not going to work. The Age at Death term was not that helpful either, since there were more than 500,000 18 year-olds killed in each war. Furthermore, a quick scan through the results from some random searches showed a lot of this data field was missing. I briefly considered searching by Cemetery and Memorial but a quick check showed the Thiepval memorial has over 70,000 names on it.

The first day of the battle of the Somme killed almost 20,000 soldiers, so splitting by Date of Death could also be ruled out. I explored this a bit further and a search for WWI, Army, Served with UK, Commemorated on Thiepval Memorial, Killed on 01/07/1916 showed 12,408 names - a truly horrific number. This was certainly an exceptional case but it was clear that the script would have to use multiple search terms to get below the threshold of 10,000 records. And even supposing the results were bundled neatly into sets of 10,000 records, scraping 1,700,000 records would require accessing 17,000 URLs. This raised an ethical question about web scraping - how many requests are reasonable before the scraping activity potentially affects the performance of the website? Scraping also runs the risk of being identified as a DoS attack, which could lead to your access to the website being blocked at best, or a criminal prosecution at worst.

So after digging around the website and some initial coding, I decided not to proceed with a fully automated script. Fortunately, the website offers a download option which outputs the results in a spreadsheet, which is limited to a maximum of 80,000 rows. This was not my preferred approach, but it still left a lot of scope to demonstrate some useful scraping methods. Equally, you have to ask yourself why you would spend a lot of time coding a complex solution when a simpler approach will suffice?

Software Tools

If you want execute the code for yourself exactly as I have, you will need:

- a recent version of the R language

- a development environment like Rstudio

- a web browser such as Google Chrome

You should be reasonably familiar with R and have a basic understanding of HTML and CSS.

Installing R Packages

This project uses the rvest package for the web scraping functions, and some other standard packages for data manipulation and visualization. R packages can be installed using:

install.packages("packagename")Once installed, packages can then be loaded with:

library(packagename)If you tend to write code on multiple machines as I do, it can become a bit confusing to remember which packages are installed on which machine. Whilst this is easy to fix, a simple way around this is to use the following code snippet at the start of your work. Just add in the required packages, and it will install and load them as required.

# Install and load required R packages -----------------------------

# List of required packages

list.of.packages <- c("rvest", # Web scraping

"data.table", # Summarise data

"tidyr", # Tidy data

"stringr", # String manipulation

"plotly") # Visualisations

# Create list of required new packages

new.packages <- list.of.packages[!(list.of.packages %in%

installed.packages()[,"Package"])]

# If non-empty list is returned, install packages

if(length(new.packages)) install.packages(new.packages)

# Load packages

lapply(list.of.packages, function(x){library(x, character.only = TRUE)}) Figure 1. Commonwealth cemetery in Krakow, Poland. August 16, 2014.

Figure 1. Commonwealth cemetery in Krakow, Poland. August 16, 2014.

List of Countries With Memorials

As part of the initial exploration, I wanted to get a breakdown of the number of war dead commemorated in each country. To do this, I looped through each country and retrieved the number of records for each. The list of countries with graves and memorials was extracted from a drop-down element on the webpage.

First, I defined the main URLs to be scraped:

# URLs to be scraped

memorial_url <- "https://www.cwgc.org/find/find-cemeteries-and-memorials"

war_dead_url <- "https://www.cwgc.org/find/find-war-dead"The contents of the memorial webpage was retrieved using the read_html() function, which returns an XML document.

# Read contents of webpage into XML document

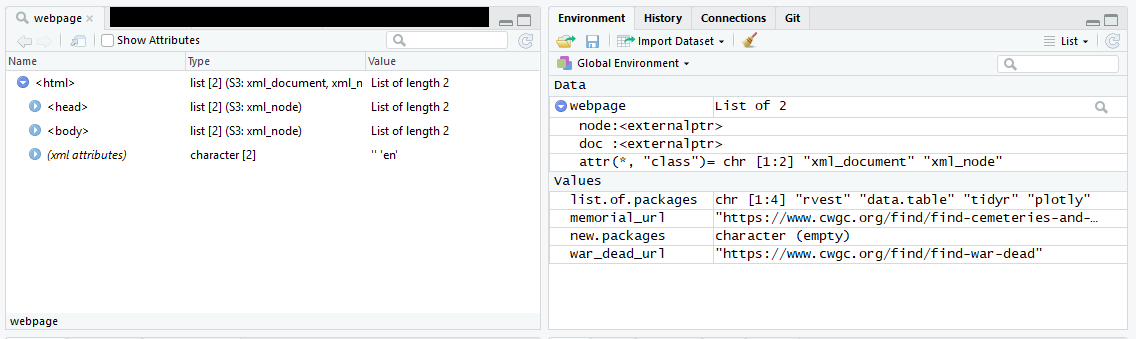

webpage <- read_html(memorial_url)When trying new functions, I tend to check the class of the objects returned by my code. In my experience, unexpected results are often caused by incorrectly applying functions to the wrong object class.

# Show class of object

class(webpage)## [1] "xml_document" "xml_node"Normally the Environment panel in Rstudio is quite useful for inspecting data objects in the work-space. However, in the case of an XML object, it’s not that helpful and the standard View() command is a bit hit-and-miss. On my laptop I found that the View() command did not work for an XML object, but it did work on my main PC (this may have to do with some differences in package versions). An alternative is to use the xml_structure() function, but the output can be cumbersome to read in the console.

# View object

View(webpage)

# or use

xml_structure(webpage) Figure 2. View contents of XML object in Rstudio

Figure 2. View contents of XML object in Rstudio

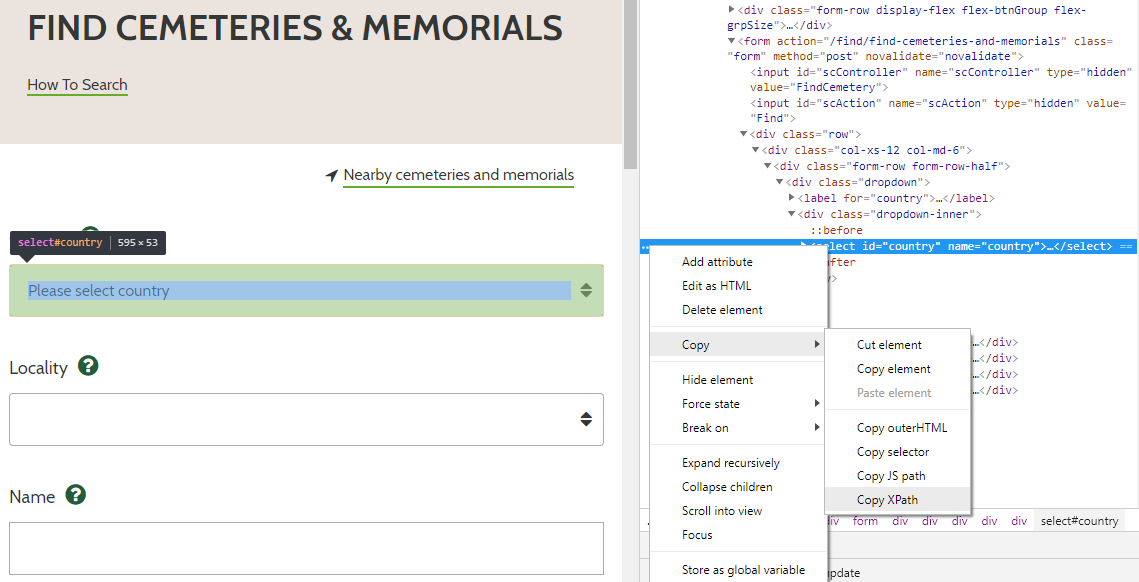

The list of countries where graves or memorials are found can be retrieved from the Country drop-down box on the search form. To reference this element, one can use either the HTML Id value or the XPath. In Chrome, you can right-click the element and choose ‘Inspect’ or use ‘Ctrl-Shift-I’. This opens up Chrome Dev Tools, where you can see the HTML elements and associated tags. The drop-down element has id = “country”, or alternatively you can copy the XPath by right-clicking the element and choosing ‘Copy XPath’.

Figure 3. HTML element Id and XPath from https://www.cwgc.org

Figure 3. HTML element Id and XPath from https://www.cwgc.org

The contents of the element can be retrieved by using the html_node() function in conjunction with the relevant CSS selector or the XPath. If using the XPath copied from Chrome Dev Tools, change the double quotes around “country” to single quotes i.e. ‘country’.

# Get contents of HTML element with CSS option

commemorated_data_html <- html_node(webpage, "#country")

# Get contents of HTML element with XPath option

commemorated_data_html <- html_node(webpage, xpath = "//*[@id ='country']")Using class() shows that the output is an XML node object.

# Show class of object

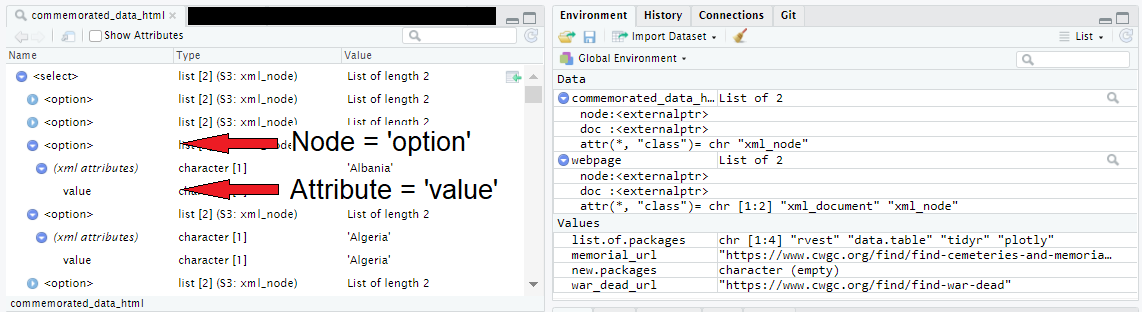

class(commemorated_data_html)## [1] "xml_missing" Figure 4. RStudio view of HTML node structure

Figure 4. RStudio view of HTML node structure

The nodes are labelled ‘option’, and each has an xml attribute labelled ‘value’. The xml attributes contain the list of countries that we are trying to extract. These are retrieved using the html_attr() method.

# Select the html nodes - note the plural 'html_nodes'

commemorated_data <- html_nodes(commemorated_data_html, "option")

# Get the XML attributes from the nodes

commemorated_data <- html_attr(commemorated_data, "value")All that remains is to tidy up the list by removing the first two elements ("" and “all”):

# Clean list

commemorated_data <- commemorated_data[3:length(commemorated_data)]And finally, the list of countries is:

# First 20 countries in which memorials are located

commemorated_data[1:20]## [1] NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NASave the results for later use:

# Save results

save(commemorated_data, file = "/path/to/your/files/countries_commemorated.rda")Conclusion

This post has discussed the initial exploration of the data source and has introduced the tools and packages that will be used. It also showed how to retrieve a list of all the countries that have memorials and graves administered by the CWGC.

Using the list of countries, the next post will show how to automatically extract the number of graves in each country.